Social media logos. Jaap Arriens

In the early days of social media, writers and journalists around the world extolled its power as the Arab Spring took hold.

Now, in the era of covid-19, experts warn of the misinformation about the pandemic, or "infodemic", that abounds on social networks.

What has changed in this decade?

How should we now understand the role of social platforms while remaining alert to the damage that their algorithms can cause?

Networks and digital activism

Social networks promised better connections and greater speed, scale and reach for digital activism.

Before they existed, organizations and public figures could use mass media, such as television, to spread their message to the general public.

The media acted as filters, disseminating information according to established criteria for deciding which news took priority and how it should be delivered.

At the same time, citizen communication, among equals, was more informal and fluid.

Social networks blur the boundary between these two types of communication and give the best-connected users the chance to become opinion makers.

Twenty years ago, no medium could raise awareness and mobilize people for a cause with the speed and scale that social networks provide. In 2017, for example, the #deleteuber hashtag led to 200,000 accounts being deleted in a single day from the transportation app, which had been accused of "thwarting" a strike against Trump's immigration ban. Previously, citizen activism needed years of negotiations between companies and activists to succeed.

Today, a single tweet can wipe millions of dollars off a company's stock market value or cause a government to change its policies.

Towards radicalization

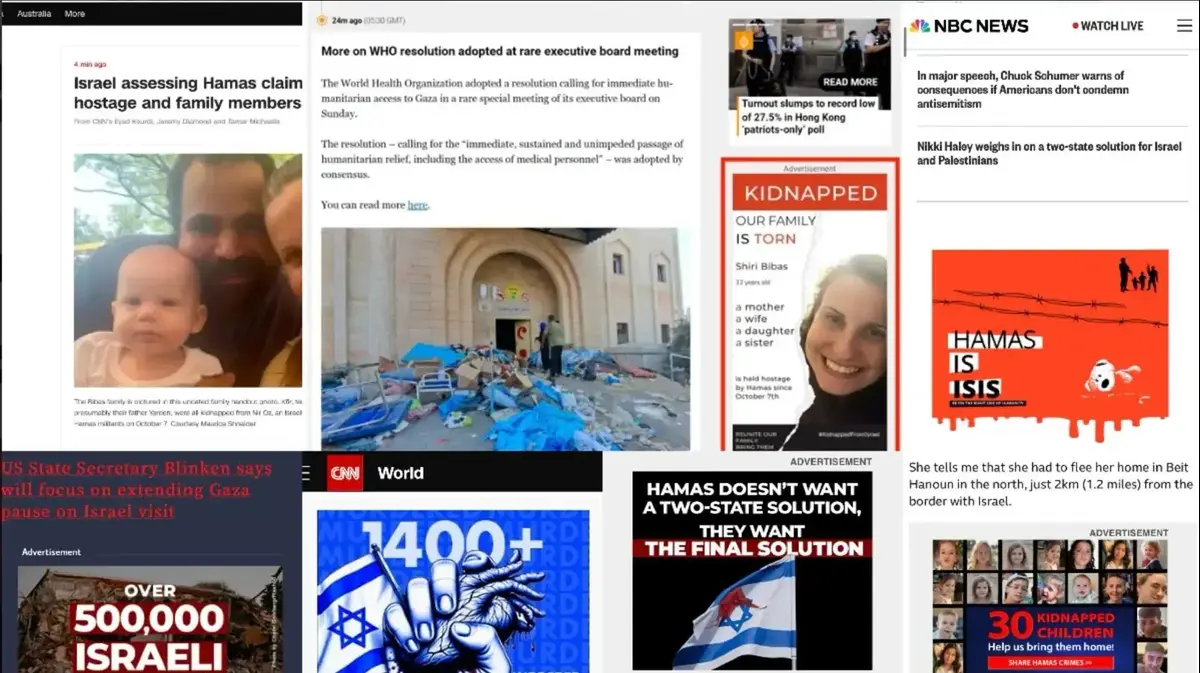

While such an opinion-maker role allows for unimpeded civic discourse that can be positive for political activism, it also makes people more susceptible to misinformation and manipulation.

The algorithms behind social media news feeds are designed to keep users interacting constantly, in order to maximize engagement.

Most major technology platforms operate without the filters that control traditional sources of information.

That, together with the large amounts of data that these companies handle, gives them enormous control over how the news reaches users.

A study published in the journal Science in 2018 found that false information spreads faster on social networks and reaches more people than true information, often because news that arouses emotion is more appealing and is therefore more likely to be shared and amplified by the algorithms.

What we see on our networks, including advertising, is tailored to what we have said we like and to our political and religious opinions.

Such personalization can have many negative effects on society, such as deterring people from voting, misinformation aimed at minorities, or advertising targeted according to discriminatory criteria.

The algorithmic design of Big Tech platforms prioritizes new and microtargeted content, leading to an almost limitless proliferation of misinformation and conspiracy theories.

Apple CEO Tim Cook warned in January: "We cannot continue to ignore a theory of technology that says any form of engagement is good."

One consequence of these engagement-based models is the radicalization of cyberspace.

Social networks provide a sense of identity, purpose, and belonging.

Those who publish conspiracy theories and contribute to misinformation also understand the viral nature of social networks, where disturbing content generates more engagement.

Coordinated actions in networks can disrupt the collective functioning of society, from financial markets to electoral processes.

The danger is that a viral phenomenon, combined with algorithmic recommendations and the echo chamber effect of social networks, ends up creating a cycle of self-reinforcing filter bubbles that push users to express increasingly radical ideas.

Let's educate about algorithms

Rectifying algorithmic biases and providing better information to users would help improve the situation.

Some types of misinformation can be tackled with a mix of government regulation and self-regulation, to ensure that content is monitored more closely and misleading information is better identified.

To do this, technology companies must work with the media and combine artificial intelligence with the detection of false information through the collective collaboration of users.

One way to solve several of these problems would be to use better bias detection strategies and offer more transparency about the algorithm's recommendations.

But more education about social networks and algorithms is also needed, so that users know to what extent the personalization and recommendations designed by big tech shape their information ecosystem, something that most people do not know enough to understand.

Adults who get their news mainly through social networks are less informed about politics and current affairs, according to a survey by the Pew Research Center in the US.

In the era of covid-19, the World Economic Forum speaks of an infodemic.

It is important to understand how platforms are deepening the divisions that already existed, with the possibility of causing real damage to users of search engines and social networks.

In my research, I have found that, depending on how the platforms respond to searches, a more health-literate user is more likely to receive helpful medical advice from a reputable institution like the Mayo Clinic, while the same search made by a less informed user will lead to pseudo-therapies or misleading medical advice.

Big tech companies have unprecedented social power.

Their decisions about which behaviors, words, and accounts to allow and which to ban govern billions of private interactions, influence public opinion, and affect trust in democratic institutions.

It is time to stop seeing these platforms as mere for-profit entities and to recognize that they have a public responsibility.

We need to talk about the impact of ubiquitous algorithms on society and be more aware of the damage they can cause as a result of our excessive dependence on big tech.

Anjana Susarla holds the Omura-Saxena Chair in Artificial Intelligence at the Eli Broad College of Business at Michigan State University.

Translation by María Luisa Rodríguez Tapia.