OpenAI opens the floodgates.

The American artificial intelligence company now lets any Internet user try its impressive Dall-E tool.

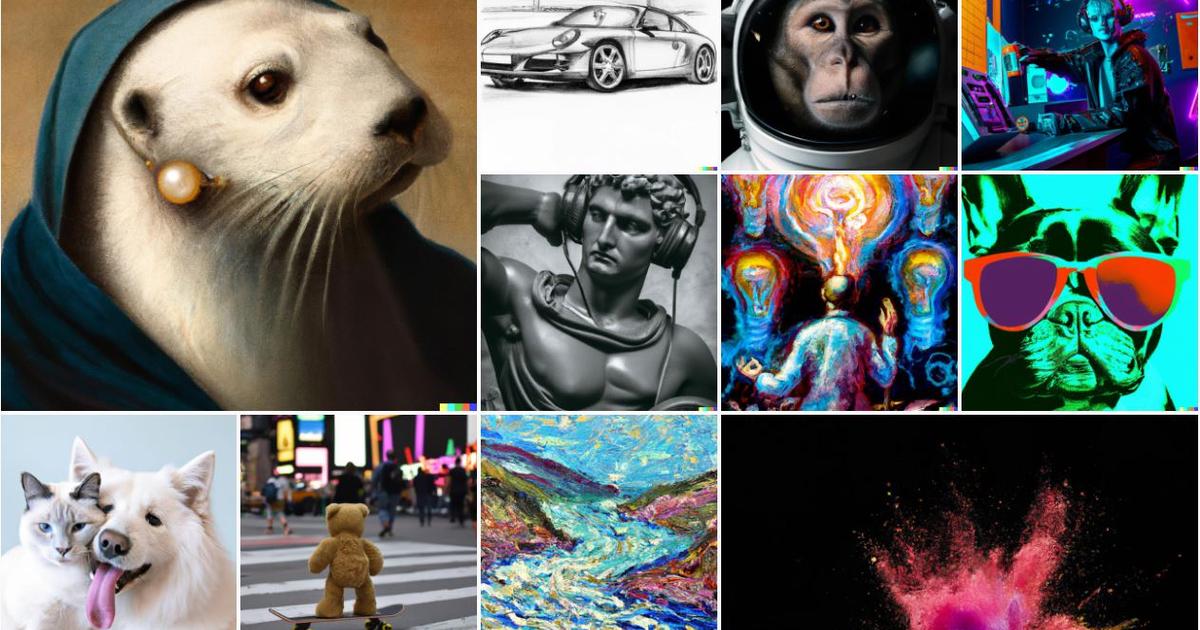

This deep neural network can generate high-resolution images in seconds from a text prompt such as “two people talking on the terrace of a Parisian café, in autumn, in the manner of Gauguin.”

Until now, access to Dall-E was reserved for professionals, who had to sign up for a waiting list.

Before the tool opened to the general public, 1.5 million users were already generating 2 million images a day on the OpenAI website.


Three images generated by Dall-E from the prompt “two people talking on the terrace of a Parisian café, in autumn, Gauguin style”. Credit: OpenAI.

This summer, the general public had already been able to try image-generating AIs on free sites such as Craiyon, often with hilarious results.

But Dall-E's neural network is more powerful and capable of creating professional-looking visuals, some of which have already made magazine covers.


Filters to prevent abuse

OpenAI has nonetheless placed limits on the use of Dall-E.

On signing up, new users receive 50 free credits, each good for one request.

After that, they get 15 free credits per month.

Beyond that, users have to pay.

The price is a flat 15 dollars for 115 credits, with no volume discount.

The American company had initially taken a cautious approach, limiting access to Dall-E for fear that malicious users would exploit it for disinformation, propaganda or cyberbullying.

This gradual opening “has allowed us to strengthen our safety systems. Our filters have become more robust and reject requests to create visuals that are violent, sexual, or that violate our content policy,” explains OpenAI.

For example, it is impossible to create images related to the war in Ukraine or depicting public figures.

OpenAI is also trying to correct the biases of its artificial intelligence, which reproduces the stereotypes it absorbed by “digesting” huge image databases.

For example, if users do not specify the gender or ethnicity of the people they want to see in their image, OpenAI adds terms such as “woman”, “Black” or “Asian” to the prompt to diversify the results.
