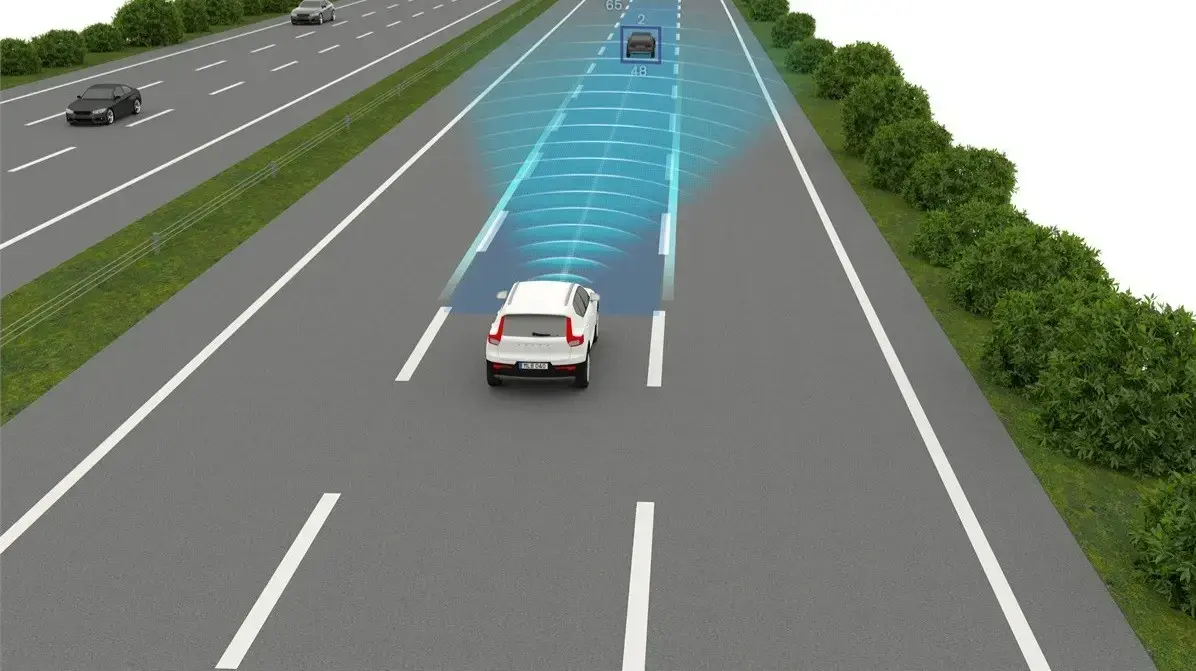

Hands-free: Demonstration of the Autopilot system in a Tesla Model S in New York (picture from 2016)

Photo: Christopher Goodney/Bloomberg/Getty Images

Tesla staged a promotional video to simulate capabilities of its self-driving technology, according to a senior engineer.

The video, archived on Tesla's website, was released in October 2016.

Tesla CEO Elon Musk hailed it on Twitter at the time as proof that "Tesla drives itself on city streets and freeways and finds a parking space (without any human intervention)".

In fact, Tesla employees had to intervene and take control several times during the test drives with the Model X.

During a parking maneuver not shown in the video, a car crashed into a fence on the company premises.

The system would not have been able to stop at a red traffic light and then accelerate without help.

That's what Ashok Elluswamy, head of software development for Tesla's Autopilot driver assistance system, said in a California court.

The previously unpublished copy of Elluswamy's statement dates from the end of June 2022 and is available to SPIEGEL.

A lawsuit was filed in Santa Clara District Court against Tesla over a 2018 accident in which a former Apple engineer died in his Tesla while it was on autopilot.

"The car drives itself"

The video, which shows a drive from a house in Menlo Park to Tesla's former headquarters in Palo Alto, California, set to music by the Rolling Stones, begins with the words: "The person in the driver's seat is only there for legal reasons. They do nothing. The car drives itself."

Elluswamy said Tesla's Autopilot team wanted to demonstrate its system's capabilities at the request of CEO Elon Musk.

When asked if the 2016 video showed the performance of the Tesla Autopilot system available in production vehicles at the time, Elluswamy replied, "It doesn't." He added that this had not been the intention, either.

The "New York Times" had already reported on the background of the video in 2021.

Citing anonymous sources, the newspaper wrote that Tesla engineers had kept quiet about the accident involving a test vehicle and about the fact that they had created a 3D map of the route.

This map allowed the vehicle to navigate the selected route more reliably than it could have on just any road.

Elluswamy's testimony marks the first time a company representative has confirmed this information.

Widow's attorney calls video 'misleading'

Elluswamy was questioned by Andrew McDevitt, the lawyer representing the widow of Walter Huang, the Apple engineer who died in the accident.

The lawyer told Reuters that the video was "manifestly misleading, with no disclaimer or asterisk."

The US National Transportation Safety Board concluded in 2020 that the accident was likely due to Huang being distracted, but also due to deficiencies in the Autopilot system.

Tesla's "ineffective monitoring of driver engagement" contributed to the accident, the agency found.

The company commented at the time that the driver was aware that the system was not perfect.

Tesla has been marketing the assistance system under the name Autopilot since 2015, and it markets a newer version as FSD (for "Full Self-Driving").

Officially, however, the company emphasizes that drivers must keep their hands on the steering wheel and remain in control of the vehicle at all times.

The technology is designed to assist with steering, braking, speed and lane changes.

However, these functions "do not make the vehicle autonomous," according to the company on its website.

Tesla sells the unlocked system (FSD currently costs $15,000 in the US) but declares it to be a beta version.

The paying customers thus also serve as test drivers for a technology that is not yet fully developed.

Tesla is betting that safety will improve as more driving data is collected.

By the company's reasoning, the system's use is already improving accident figures, because it still causes fewer accidents than people behind the wheel.

Mahmood Hikmet, who works as a developer for the New Zealand robotic car company Ohmio, expressed dismay on Twitter at the court record.

Elluswamy himself stated in it that he did not know what basic terms in autonomous driving and software development meant.

Tesla is facing multiple lawsuits and regulatory investigations over its driver assistance systems.

In 2021, the US Department of Justice opened a criminal investigation into a series of accidents.

On average, one Autopilot-related accident is reported every day, according to the New York Times.

The assistance system now switches itself off if it does not register regular feedback from the driver.

Elluswamy said drivers can "fool" the system with small movements of the steering wheel.

However, he said, there is no safety problem with Autopilot if drivers pay close attention.

uhh/Reuters