Artificial intelligence (AI) poses an "extinction risk" to humanity on a par with catastrophes such as nuclear war or a pandemic. That is the conclusion of a group of 350 executives, researchers and engineers working in the field, who signed a statement of just 22 words published Tuesday by the Center for AI Safety, a nonprofit organization. "Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war," reads the statement, whose signatories include the heads of three of the leading artificial intelligence companies: Sam Altman (CEO of OpenAI), Demis Hassabis (Google DeepMind) and Dario Amodei (Anthropic). The signatories also include researchers Geoffrey Hinton and Yoshua Bengio, who are often considered godfathers of the modern AI movement. Hinton, who held a vice presidency at Google, left the company a few weeks ago because he believes that this technology could lead to the end of civilization within a matter of years, as he told EL PAÍS.

The statement comes amid growing concern about a rapidly developing sector that is proving difficult to control. Altman himself had already spoken out on this issue during his appearance before the US Senate, where he acknowledged the importance of regulating generative artificial intelligence. "My worst fear is that this technology will go wrong. And if it goes wrong, it can go very wrong," he said just two weeks ago during the first AI hearing on Capitol Hill. The head of OpenAI, the company behind ChatGPT, the most popular and powerful generative AI program, added that he understood that "people are anxious about how [AI] could change the way we live," and that for this reason it is necessary to "work together to identify and manage the potential downsides so that we can all enjoy the tremendous upsides."

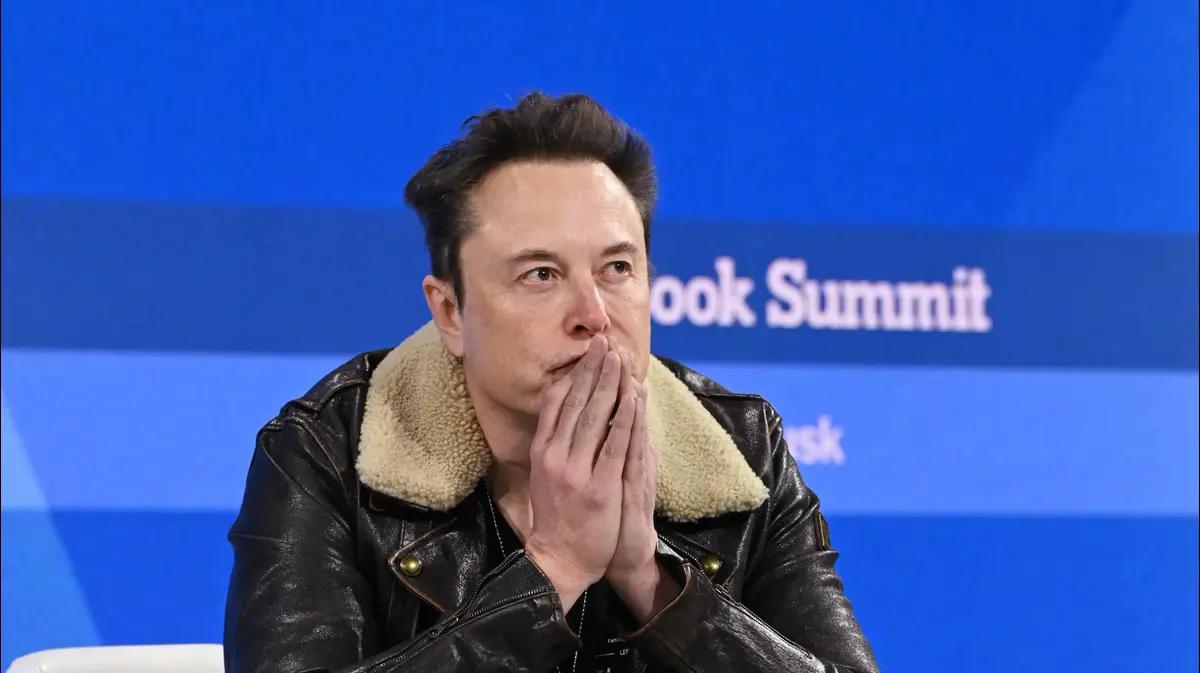

This is not the first time that leading figures in the field have issued warnings of this magnitude about the future of AI. In March, more than a thousand intellectuals, researchers and entrepreneurs signed another open letter calling on AI labs to pause "for at least 6 months the training of AI systems more powerful than GPT-4," the latest version of the OpenAI model that powers ChatGPT. In the letter, the signatories warned that OpenAI's tool is already capable of competing with humans at a growing number of tasks, and that it could be used to destroy jobs and spread disinformation. "Unfortunately, this level of planning and management is not happening, even though recent months have seen AI labs locked in an out-of-control race to develop and deploy ever more powerful digital minds that no one, not even their creators, can understand, predict, or reliably control," the letter said. It was also signed by tycoon Elon Musk, founder of Tesla and SpaceX, owner of Twitter and one of the co-founders of OpenAI.

Another voice of alarm in the field has been British computer scientist Geoffrey Hinton, who left his job at Google at the beginning of May so that he could warn more freely about the dangers posed by these new technologies. "From what we know so far about how the human brain works, our learning process is probably less efficient than that of computers," he told EL PAÍS a few weeks ago. In the same interview, Hinton said that artificial intelligence could surpass human intelligence within "five to 20 years." "Our brains are the fruit of evolution and have a number of built-in goals, such as not hurting the body, hence the notion of harm; eating enough, hence hunger; and making as many copies of ourselves as possible, hence sexual desire. Synthetic intelligences, on the other hand, have not evolved: we have built them. Therefore, they do not necessarily come with innate goals. So the big question is, can we make sure they have goals that benefit us?" he said.
