At your next job interview, your profile may be discarded because of a facial microexpression that a machine deems inappropriate. Or you may be stopped while queuing at US customs because an artificial intelligence system considers your facial gestures typical of someone about to commit an attack. These are two examples of the application of so-called emotion detection systems (affect recognition). It is a controversial technology, based on machine learning algorithms, that claims to recognize what a person feels just by analyzing their face. It is one more step in the career of facial recognition: after identifying individuals by processing an image, the goal now is to detect and classify the feelings their faces reveal.

The technology is controversial because it has a serious underlying problem: the theories on which these applications are based are outdated. Emotions do not translate into universal facial expressions that can be parameterized. Two groups of researchers have shown this scientifically: one at Yale University and the other led by psychology professor José Miguel Fernández Dols, of the Autonomous University of Madrid. Fernández Dols has just published an article in the scientific journal Emotions, one of the most important in this discipline, in which he reviews all the research done in this field that underpins the conclusion he has reached in recent years: it is impossible to know what a person is experiencing by looking only at their face. Context is decisive for interpreting any grimace or wink, and that contextual and cultural information does not enter into the analysis performed by artificial intelligence systems.

Despite this, these systems are expanding rapidly. Companies such as Amazon (Rekognition), Microsoft (Face API), Apple (which bought the startup Emotient) and IBM have their own developments in this area. Although the technology's origins are mainly military, it is widely used in personnel recruitment processes. It is also used in educational settings (to find out whether students are paying attention in class or are bored), for border surveillance, and even in some European football stadiums to prevent fights.

Personnel selection is one of the areas in which these systems are growing the most. The London startup Human, for example, analyzes videos of interviews and claims it can identify expressions of emotion and relate them to character traits, ultimately scoring candidates on traits such as honesty or passion for work, according to the Financial Times. Another company, HireVue, whose clients include Goldman Sachs, Intel and Unilever, has designed a system that infers a candidate's suitability for a position by comparing their facial expressions with those of the highest-performing workers.

“Emotion detection systems are usually applied in selection processes for organizations or companies that have to recruit a large number of people continuously, mainly for low-skilled and low-paid positions”, explains Lorena Jaume-Palasí, consultant and founder of The Ethical Tech Society, a multidisciplinary organization that analyzes algorithmic ethics.

Jaume-Palasí, a Spaniard based in Berlin, wrote a report for the German government in which she examined precisely how these models operate.

She found that they are used by everyone from Ikea to oil companies, and also by the EU itself.

A mistake that dates back to the sixties

The idea of inferring human behavior from people's appearance is not new.

“It is linked to phrenology, the pseudoscience that tried to link cranial geometry to certain behaviors. And to other currents of thought that always ranked human beings in hierarchical categories, with the white man at the top of the pyramid”, says Jaume-Palasí.

In 1967, an American psychologist named Paul Ekman traveled through the mountainous areas of Papua New Guinea, showing flashcards with pictures of people expressing astonishment, happiness or disgust in the most remote indigenous villages.

He wanted to show that all human beings have a small number of emotions that are innate, natural, universally recognizable and cross-cultural.

A few years later, Ekman became a celebrity in academia.

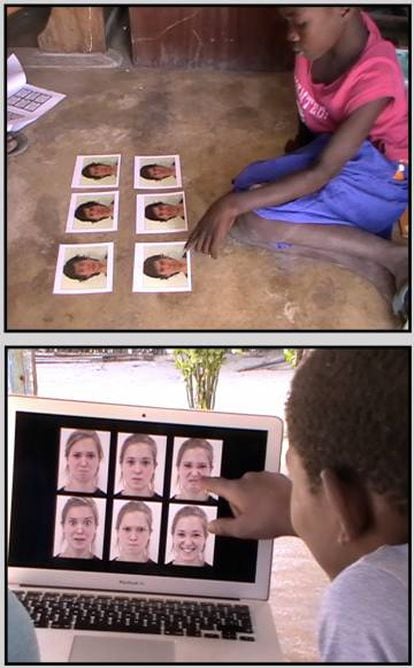

Two participants in the study conducted by Fernández Dols' team in Matemo, Mozambique. (C. Crivelli)

His theory rests on two pillars. The first is that anyone is capable of recognizing certain expressions. In other words, if someone from a relatively isolated society is shown a photo of a smiling face, they will say that the person in the image is happy. These conclusions are based on a number of studies carried out in developed countries and on Ekman's work in Papua New Guinea. “What has happened is that for about 20 years, new, methodologically better research has been emerging that shows this recognition is not universal but depends on cultural factors”, explains Fernández Dols. "If you go to Papua or to certain parts of Africa you realize that this supposed universal recognition does not exist," he stresses.

That is precisely what his team has done. They repeated Ekman's experiment in Papua and on an island in Mozambique, but unlike Ekman, Fernández Dols was accompanied by an expert in the language and culture of the area, a detail that changes everything. Lisa Feldman and Maria Gendron of Yale University did the same in remote villages in Namibia and Tanzania and reached the same conclusions. “Oddly enough, no more rigorous cross-cultural research had been done since the 1960s. Ours and Yale's were the first”, he adds.

The second pillar of Ekman's theory is that people actually display these supposedly universal expressions on their faces when they experience an emotion: they smile when they are happy, frown when they are dissatisfied, and so on. This is harder to verify, but Fernández Dols has been working on it for years. “What we have seen is that when you manage to record people's expressions in situations in which they report feeling a certain emotion, the expressions supposedly associated with that emotion do not appear,” he explains.

You can smile and be sad or embarrassed.

Moreover, a smile does not mean the same thing everywhere.

A study conducted at Yale simulated job interviews with young women.

“The interviewer made inappropriate remarks to the young women while their behavior was recorded, and most were found to be smiling in an obviously stressful and unpleasant situation. You also smile when you are in a tight spot or stressed, or in humorous situations that do not necessarily mean you are happy”, the professor adds.

A convenient simplification

But Ekman's theories are so compelling that they have found a strong foothold in artificial intelligence applications. "Ekman's model provides two essential things for machine learning systems: a finite, stable, and discrete set of labels that humans can use to categorize photos of faces, and a system for measuring them," writes Kate Crawford in her book Atlas of AI (Yale University Press, 2021). "It allows you to eliminate the difficult work of representing people's inner lives without even asking them how they feel."

According to the Australian author, emotion detection systems are closely linked to the military and security fields. During the Cold War they were nourished by funding from US intelligence services, which were eager to develop the field of computer vision (the recognition of images by computers). After 9/11, research programs aimed at identifying terrorists and detecting suspicious behavior proliferated.

The problem is that, because they rest on a flawed premise, these systems often fail.

And they tend to harm the same people as always.

A study from the University of Maryland shows that some facial recognition systems interpret negative emotions, particularly anger or contemptuous looks, more frequently in the faces of black people than in those of white people.

These systems have biases.

And what they lack for sure is a scientific basis.
